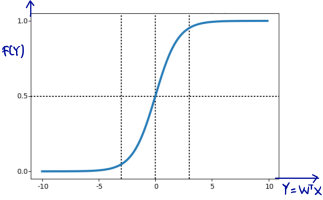

Logistic regression extends the regression method to classification. Earlier in this machine learning series, we covered regression using LSE. To use a regression approach for classification, we feed the regression output into a so-called activation function, usually the sigmoid activation function. See the picture above.
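For reference, the sigmoid function is commonly written as below. The symbols $z$, $w$, $x$, and $b$ are notation assumed here for the regression output, weights, input, and bias; they are not taken from the post itself.

$$\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad z = w^\top x + b$$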

The sigmoid function has an S-shaped curve, as in the picture above, and its output ranges from zero to one. Logistic regression was originally intended for binary classification. As the picture shows, the regression output is fed into the sigmoid activation function. We classify an input as $class_1$ when the output is close to 1 (formally, when the output is at least 0.5, the usual threshold) and as $class_2$ when the output is close to 0 (formally, when the output is below 0.5). To find parameters that do this, we maximize the likelihood using MLE (Maximum Likelihood Estimation).
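The classification rule and the quantity that MLE maximizes can be sketched in a few lines of Python. This is a minimal illustration, not the post's own implementation: the function names, the 0.5 threshold, and the label encoding (1 for $class_1$, 0 for $class_2$) are assumptions made for the example.

```python
import math


def sigmoid(z):
    """Map a real-valued regression output z into the interval (0, 1)."""
    # Two branches keep math.exp from overflowing for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def classify(z, threshold=0.5):
    """Return 1 for class_1 if sigmoid(z) >= threshold, else 2 for class_2.

    The 0.5 default is the conventional threshold (an assumption here).
    """
    return 1 if sigmoid(z) >= threshold else 2


def log_likelihood(zs, labels):
    """Bernoulli log-likelihood of the labels given regression outputs zs.

    labels use the assumed encoding: 1 for class_1, 0 for class_2.
    MLE looks for the parameters that make this value as large as possible.
    """
    total = 0.0
    for z, y in zip(zs, labels):
        p = sigmoid(z)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total
```

For instance, `classify(2.0)` yields class 1 and `classify(-2.0)` yields class 2, and regression outputs that agree with the labels give a higher log-likelihood than outputs that contradict them.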